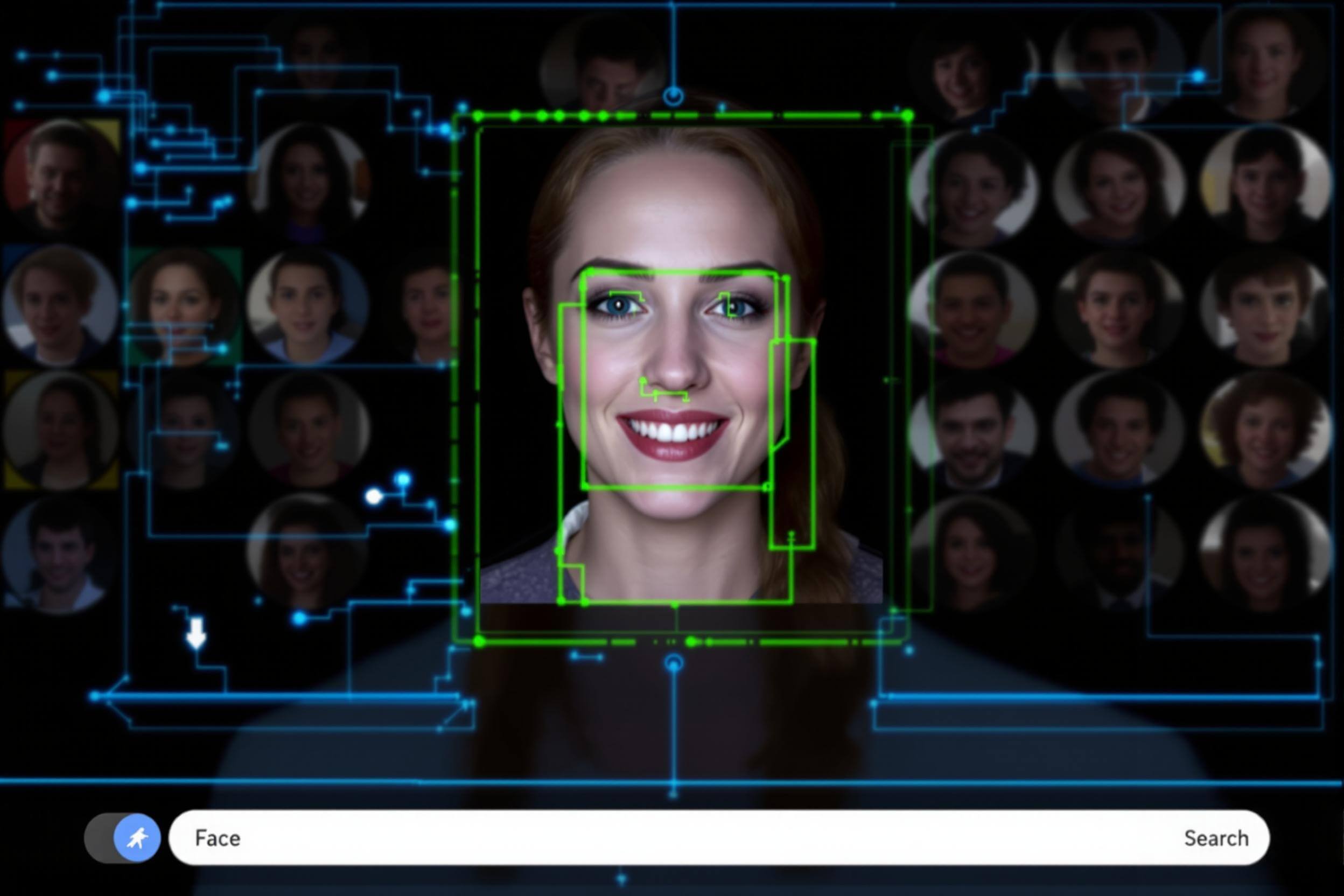

Face Search and Dupe Fraud Detection for ID Verification

An important anti-fraud measure is searching for duplicate faces during ID verification. It goes by a few names, such as 1:N face identification (Face Recognition Technology Evaluation (FRTE) 1:N Identification), face search, or face deduplication. This method helps detect repeat signups and other abusive behavior that tries to evade your verification checks. For example, you may run a gaming site that pays a $500 bonus for each new signup, and you start to notice the same people signing up again with new information. They are smart enough to vary the basics such as IP address, device, and ID attributes, but their face remains the same.

Changing one's face is much harder, especially if the verification uses a liveness check that prevents deepfakes. Short of surgery, there is little a person can alter, so their facial biometrics stay similar even across completely separate identities. They might try obfuscation such as glasses or a fake mustache, but a robust biometric system maps a face accurately enough to see past these. A fake ID with their face swapped in would still link them across multiple identities. Any verification service should offer this option to detect fraud. It should look for duplicate facial biometrics as well as other attributes such as document numbers, names, and dates of birth to find common data points.
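As a rough illustration of finding common data points across signups, the sketch below groups records that share a normalized attribute value. The field names (`document_number`, `dob`, `name`), the record layout, and the `find_shared_attributes` helper are all hypothetical, and a real system would likely use fuzzier matching than exact comparison.

```python
from collections import defaultdict

def find_shared_attributes(signups, fields=("document_number", "dob", "name")):
    """Return attribute values that appear on more than one signup.

    The field names are illustrative assumptions, not a real schema.
    """
    seen = defaultdict(list)
    for signup in signups:
        for field in fields:
            value = signup.get(field)
            if value:
                # Normalize lightly so "X123" and "x123 " count as the same value.
                seen[(field, value.strip().lower())].append(signup["id"])
    # Keep only values shared by two or more signups.
    return {key: ids for key, ids in seen.items() if len(ids) > 1}

signups = [
    {"id": "a1", "name": "Jane Roe", "dob": "1990-04-02", "document_number": "X123"},
    {"id": "b2", "name": "J. Smith", "dob": "1990-04-02", "document_number": "x123 "},
    {"id": "c3", "name": "Ann Lee", "dob": "1985-11-30", "document_number": "Z999"},
]
shared = find_shared_attributes(signups)
```

Here signups `a1` and `b2` are linked by both document number and date of birth despite using different names, which is the kind of overlap worth sending to review alongside a face match.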

There are several use cases for face search, but we focus mainly on fraud prevention. Other examples include security cameras that track repeat retail fraud, access control that verifies employee entry to an office, banking for in-branch withdrawals, and government for secure site access. All of these require a face database that registers and stores a vector for each face for later retrieval and similarity scoring. For example, a person may register their face before visiting an office. Upon arrival, a security guard takes another photo and compares it with the record already in the system before approving access.
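The register-then-verify flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `FaceRegistry` class, the `0.8` threshold, and the four-number vectors are assumptions made for the example. In practice the vectors would come from a face embedding model and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

class FaceRegistry:
    """Hypothetical in-memory store of one face vector per person."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed cutoff; tune per model in practice
        self._vectors = {}

    def register(self, person_id, embedding):
        self._vectors[person_id] = list(embedding)

    def verify(self, person_id, embedding):
        """Compare a fresh photo's vector with the stored one (a 1:1 check)."""
        stored = self._vectors.get(person_id)
        if stored is None:
            return False, 0.0
        score = cosine_similarity(stored, embedding)
        return score >= self.threshold, score

registry = FaceRegistry()
registry.register("employee-17", [0.9, 0.1, 0.3, 0.2])

# A new photo of the same person yields a nearby vector; a stranger does not.
ok_same, score_same = registry.verify("employee-17", [0.88, 0.12, 0.31, 0.18])
ok_other, score_other = registry.verify("employee-17", [0.1, 0.9, 0.2, 0.4])
```

The threshold controls the trade-off between false accepts and false rejects, so it should be chosen from measured error rates rather than copied from this sketch.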

When tracking known faces, it is also vital to stay in compliance with applicable privacy laws and regulations. Certain regions prohibit this type of tracking, so the feature should be enabled selectively for fraud prevention, and only where allowed. Another key aspect is ensuring that the face database is not shared with other businesses or services. Trust Swiftly makes sure every face is completely siloed, and there is no option for creating a central tracking method that could later identify a person. Some services sell to governments that build massive tracking mechanisms with billions of data points. If you have ever traveled or passed a camera, there is likely a record of your identity in a database somewhere, which makes it easy to track movement and identify people well before they reach a particular destination. As seen in the NIST testing, many of the solutions are Chinese-based. China has rolled out this tracking the fastest and most accurately, but other countries are not far behind, or are simply less public about it.

Face search can be easily abused, so selecting a solution that understands these risks is critical. Privacy measures such as automatic purging of records or other expiration policies help minimize data risk. A face record is a mathematical representation of a face, a vector, stored in a database. An algorithm then runs a vector search to find the closest stored vector, which is the most similar face. A demo and technical overview of an example face search solution can be found here: Postgres As A Vector Database: Billion-Scale Vector Similarity Search With pgvector - Sefik Ilkin Serengil. Understanding the underlying technology is critical because it shows you the pitfalls, including the potential for false matches. When using face duplicate detection, you should still have a secondary human review to confirm that the system flagged the dupe correctly. For customers who want an extra review, we can combine multiple leading facial recognition algorithms to produce a more accurate face score. One such case is identical twins, where many algorithms falsely flag one twin as a dupe of the other (Is the iPhone X's Facial Recognition Twin Compatible?). In that example, even Face ID, which uses advanced 3D checks, treats the twins as the same person and allows the authentication.
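To make the vector search and purging ideas concrete, here is a minimal brute-force sketch of a 1:N search with record expiry. The `FaceIndex` class, the `0.1` distance cutoff, and the 90-day retention period are assumptions for illustration only. A production system would use a database index (such as pgvector in the linked article) instead of scanning every record, and results are treated as candidates for human review rather than final verdicts.

```python
import math
import time

def cosine_distance(a, b):
    """0.0 means identical direction; larger values mean less similar faces."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

class FaceIndex:
    """Hypothetical in-memory face index with time-based purging."""

    def __init__(self, max_distance=0.1, ttl_seconds=90 * 24 * 3600):
        self.max_distance = max_distance  # assumed cutoff for a possible dupe
        self.ttl_seconds = ttl_seconds    # assumed retention period (90 days)
        self._records = {}                # record_id -> (embedding, created_at)

    def add(self, record_id, embedding, created_at=None):
        created = time.time() if created_at is None else created_at
        self._records[record_id] = (list(embedding), created)

    def purge_expired(self, now=None):
        """Delete records older than the retention period; return how many."""
        now = time.time() if now is None else now
        expired = [rid for rid, (_, created) in self._records.items()
                   if now - created > self.ttl_seconds]
        for rid in expired:
            del self._records[rid]
        return len(expired)

    def search(self, embedding, top_k=3):
        """Return up to top_k nearest records within max_distance, closest first."""
        scored = [(rid, cosine_distance(vec, embedding))
                  for rid, (vec, _) in self._records.items()]
        candidates = [(rid, d) for rid, d in scored if d <= self.max_distance]
        candidates.sort(key=lambda pair: pair[1])
        return candidates[:top_k]

index = FaceIndex()
now = 1_000_000_000.0  # fixed timestamp so the example is reproducible
index.add("signup-001", [0.9, 0.1, 0.3, 0.2], created_at=now)
index.add("signup-002", [0.1, 0.9, 0.2, 0.4], created_at=now)
index.add("signup-old", [0.9, 0.1, 0.3, 0.2], created_at=now - 200 * 24 * 3600)

purged = index.purge_expired(now=now)           # removes the 200-day-old record
matches = index.search([0.88, 0.12, 0.31, 0.18])
needs_human_review = [rid for rid, _ in matches]
```

Purging before searching means an expired record can no longer link a person to a new signup. Routing matches to a review queue instead of auto-rejecting leaves room for cases such as identical twins.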

As can be seen, face duplicate detection is another crucial security tool when verifying people. Once enabled, it may uncover new fraud networks, which helps prevent future ban evasion. There are some pre-built methods, such as Google's Celebrity Recognition (Celebrity Recognition Case Study | Google Cloud Skills Boost). However, those checks will only catch the most obvious fraudulent faces. Depending on your business, you can create a prepopulated database with images of people who should be reviewed. For example, if you are protecting a sensitive location, you might already have a list of pre-authorized and blocked persons keyed solely on their facial biometrics, so that even fake or stolen IDs do not bypass security checks.

Use of this technology will likely increase rapidly and with fewer checks, as almost anyone can deploy it at this point. There have already been a few rudimentary use cases (I-XRAY: The AI Glasses That Reveal Anyone's Personal Details just from Looking at Them - YouTube); however, robust non-consumer solutions exist today that work much better because of the scale of their facial biometric data. The real threat comes when those who control the technology abuse it to gain more power to track and identify others without consent or review. One can imagine a future scenario in which a fully automated drone neutralizes a target upon identification, even though identification can never be 100% accurate from a face alone. Companies will claim that thermal, gait, and body features can improve accuracy, but no technology is ever foolproof. There is always a bypass or flaw. Consent is a significant requirement: it should be explained in clear terms before and after collection, and people should be able to revoke these attributes. In review, these methods allow for powerful tracking and should be implemented carefully to ensure they are used appropriately.