Countering AI-Generated Attacks with Human-Built Innovation

As more AI models and tools are released into the wild, their output is becoming more visually realistic and the tools themselves easier to use. Once a model is optimized to run on lower-end hardware and produces realistic output, it gains the critical mass needed to be used for fraud. Most fraudsters do not take the time to learn the intricacies of AI tools; they want a point-and-click solution that returns the greatest ROI. The market demand for high-quality fraud tools is massive and will soon steamroll many legacy security solutions. We are already seeing the early effects of this on traditional liveness and deepfake detection. Just one year ago, most anti-spoofing models quickly detected many deepfakes. Now, we can defeat all passive models with less than 30 minutes of work and little to no technical setup using free tools. GenAI's realism outpaces most security measures, and in a red vs. blue scenario, the bets will almost always be placed on the attacker.

We will explore how businesses and people can stay ahead of GenAI and future AI-assisted fraud. Businesses and people are pouring money into AI as the path to optimal growth. By using GenAI tools yourself, you will understand their power but also recognize their weaknesses, where they can eventually be outsmarted or tricked by a single person. Similar to virtualization in computing, placing AI fraud inside multiple container layers is critical. Expect it to break out of one layer, but have another layer of defense ready to frustrate the bad actor. You may even contain and observe a bad actor to gather further data on their strategies and use that to build more secure solutions. With additional logging, we can backtrace each interaction to see the exact issue and construct a remediation. This strategy has worked effectively for many clients, who appear one step ahead of fraud and make fraudsters go elsewhere. Allowing attackers to believe they have the upper hand leads them straight into future traps.

Stopping all fraud at the door is impossible, and attempting it causes more friction and negative reviews than it is worth, which leads to financial loss. For example, Stripe accepts this trade-off in its merchant onboarding, where many merchants can sign up quickly with minimal KYC checks. However, if a merchant does anything odd or has increased chargebacks, Stripe steps up the account review. Another interesting approach is sandboxing the user: they believe they have defeated a security measure, but are later required to complete a more intensive check at a random time uncorrelated with any of their prior actions. Patience is vital in some of these scenarios, as it creates doubt in the attacker and denies them concrete data. Fraudsters and GenAI like stability, as it gives them the advantage of maintaining their lead. Stability also instills a sense of complacency in them, which keeps them from training and improving their tools too much. Security solutions can't deploy their best methods against every attack, as that gives insight into how to counter them. Instead, you need to be calculated in how you approach each attack. For example, we will not fight fire with fire in GenAI; the appendix describes how deepfake models compete against themselves. Humans will also fail if they go head-to-head with AI using brute force or quick modeling. Instead, you must throw a curveball and disrupt the attacker's entire plan. Putting all your eggs in one basket for AI is a fool's game and will lead to an eventual breakout scenario.
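The delayed step-up idea above can be sketched in a few lines. This is a minimal illustration, not a description of any vendor's system: the function names, the delay window, and the list of check types are all assumptions made for the example.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StepUpPlan:
    user_id: str
    due_at: datetime
    check: str


# Hypothetical check types; a real system would define its own.
STEP_UP_CHECKS = ["document_recapture", "video_selfie", "live_agent_call"]


def schedule_step_up(user_id, now, min_delay_hours=6, max_delay_hours=96):
    """Pick a step-up time from a random window that ignores user actions."""
    window_seconds = (max_delay_hours - min_delay_hours) * 3600
    # secrets draws from the OS CSPRNG, so the delay cannot be inferred
    # from earlier delays or from anything the user did in the session.
    offset = secrets.randbelow(window_seconds + 1)
    due_at = now + timedelta(hours=min_delay_hours, seconds=offset)
    return StepUpPlan(user_id, due_at, secrets.choice(STEP_UP_CHECKS))


def is_due(plan, now):
    """True once the scheduled time has passed and the check should fire."""
    return now >= plan.due_at


if __name__ == "__main__":
    plan = schedule_step_up("user-123", datetime.now(timezone.utc))
    print(plan)
```

Because the schedule is drawn once at onboarding and never recomputed from behavior, an attacker probing the system gets no signal about which action, if any, triggered the later check.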

Looking into the psychology of attackers, you begin to understand the reasoning that drives them to focus on short-term workarounds. A fraudster is seeking immediate gratification through a stolen wire or a sock puppet account, and they need to work quickly to earn and extract value. These types of ventures are highly volatile, and the people running them usually won't have the time to invest in leading trends, so you must appear one step ahead while keeping an unlimited supply of ideas to counter a threat. Embedding personas and conducting reconnaissance within these fraud communities is critical to spotting new trends.

Some companies also believe that once their AI hits a supposed singularity, they can sit back and relax because no human could outsmart their tool. This is another pitfall that will lead to the demise of many security solutions. Enough focus and attention on a problem can eventually produce a method to bypass it altogether. The pace at which countermeasures need to be deployed will increase, and they will require more tuning. The companies at the forefront of liveness checks apply friction with real-time feedback to decide how they want to detect a deepfake. For example, some might require lighting checks, head turns, or even a response to a prompt generated by GenAI. These checks help counter pre-built attacks, since it is difficult to prepare for every possible requirement in advance. Real-time spoofs can also be defeated, as discussed in our previous blog posts.
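As a rough sketch of why randomized friction hurts pre-built attacks, the snippet below draws an unpredictable challenge sequence for each session. The challenge names are hypothetical, and the spoken random digits are a simple stand-in for a GenAI-generated prompt.

```python
import secrets

# Hypothetical challenge catalogue; real products define their own.
CHALLENGE_POOL = [
    "turn_head_left",
    "turn_head_right",
    "look_up",
    "screen_flash_lighting_check",
    "move_closer_to_camera",
]


def build_liveness_session(num_challenges=3, num_digits=4):
    """Return a per-session challenge plan that cannot be known in advance."""
    rng = secrets.SystemRandom()
    # sample() picks distinct challenges in a random order.
    steps = rng.sample(CHALLENGE_POOL, k=num_challenges)
    # Random digits the user must say aloud (stand-in for a generated prompt).
    spoken_digits = " ".join(str(secrets.randbelow(10)) for _ in range(num_digits))
    return {"steps": steps, "spoken_digits": spoken_digits}


if __name__ == "__main__":
    print(build_liveness_session())
```

With five challenges taken three at a time in order, plus four spoken digits, a pre-recorded clip would need to cover 60 × 10,000 combinations, which is the point of making the requirements hard to build for in advance.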

Obscurity is a tremendous offensive counter to AI attacks because, in the end, they usually rely on models that are well trained on known data. Thinking outside the box is where humans and fraud prevention solutions can still have the edge. Going one step further, we have seen the benefits of allowing a cohort of bad actors to stay active in order to mark the greater network. The cost-benefit analysis favors keeping the bad actors easily identifiable and prunable. In one attack by North Korea, the attackers swapped faces for KYC at a crypto company but did not change the T-shirt that appeared in all of the submissions. Deep analysis of photos and fingerprinting of different sections of the images can help identify these scenarios. By using GenAI to generate labels and other identifiers, you can start building a graph that links many verifications. However, with something like ControlNet, an attacker can apply many different diffusers that add enough randomization to evade this kind of tracking.
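A toy version of section-level fingerprinting and linking is sketched below. It assumes each image has already been decoded into a 2D list of grayscale values, uses a simple average hash per grid cell, and links verifications only on exact hash matches; a production system would more likely use learned embeddings or Hamming-distance thresholds. All function names are illustrative.

```python
from collections import defaultdict


def region_hash(pixels, top, left, height, width):
    """Average hash of one rectangular region of a grayscale image."""
    values = [pixels[r][c]
              for r in range(top, top + height)
              for c in range(left, left + width)]
    mean = sum(values) / len(values)
    bits = 0
    for v in values:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def fingerprint(pixels, grid=2):
    """Return {(row, col): hash} for a grid x grid split of the image."""
    h, w = len(pixels) // grid, len(pixels[0]) // grid
    return {(gr, gc): region_hash(pixels, gr * h, gc * w, h, w)
            for gr in range(grid) for gc in range(grid)}


def link_verifications(fingerprints):
    """Cluster verification IDs that share a hash in the same region."""
    parent = {vid: vid for vid in fingerprints}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    first_seen = {}
    for vid, fp in fingerprints.items():
        for region, value in fp.items():
            if value == 0:
                continue  # a flat region carries no identifying signal
            key = (region, value)
            if key in first_seen:
                parent[find(vid)] = find(first_seen[key])
            else:
                first_seen[key] = vid

    clusters = defaultdict(set)
    for vid in fingerprints:
        clusters[find(vid)].add(vid)
    return [c for c in clusters.values() if len(c) > 1]
```

In the T-shirt scenario, the face regions of each submission would hash differently while the lower regions match, so the submissions fall into one cluster even though no face is reused.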

GenAI should also be expected to self-improve, but that doesn't stop it from poisoning defenses. An AI agent could constantly be fed images scraped from social media, providing new faces that wouldn't be detected when transformed into a usable KYC deepfake. However, the quality of that data can be severely hampered by forensic-level tracing, as detailed in the paper "Beyond Deepfake Images" (Drexel University, CVPR WMF 24; ductai199x.github.io). Multiple digital signatures exist on everything, and anonymity is never permanent, especially when new tools train on legacy data. Using novel techniques to uncover fraud can help identify existing threat actors that were able to bypass earlier security measures. Providing false feedback is another method to trick GenAI during verification. One way is to tell the user that the picture is too blurry or too dark, rather than revealing that the image failed a liveness check as a deepfake. This type of manipulation is similar to how security researchers currently bypass some AI tools by invoking hallucinations to jailbreak them. Putting fraudsters through more rigor, which Trust Swiftly has adopted as its core ethos since inception, is critical to ensuring any bypasses are limited in scope.
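The false-feedback idea can be illustrated with a small mapping from the internal verdict to what the user is shown. This is only a sketch under assumed names: the reason codes, messages, and logger name are hypothetical, and the real verdict is kept in server-side logs only.

```python
import logging
import secrets

logger = logging.getLogger("verification")

# Hypothetical decoy messages shown in place of the real rejection reason.
DECOY_MESSAGES = [
    "The picture is too blurry. Please retake it.",
    "The picture is too dark. Please move to a brighter area.",
]


def user_facing_message(internal_reason, session_id):
    """Translate an internal verdict into a message safe to show the user."""
    # The true reason is recorded for analysts but never returned to the client.
    logger.info("session=%s internal_reason=%s", session_id, internal_reason)
    if internal_reason == "passed":
        return "Verification complete."
    if internal_reason == "deepfake_suspected":
        # Give no hint that the detector fired; pick a plausible decoy.
        return secrets.choice(DECOY_MESSAGES)
    return "We could not verify this image. Please try again."


if __name__ == "__main__":
    print(user_facing_message("deepfake_suspected", "sess-42"))
```

An attacker tuning a generator against these responses ends up optimizing for sharpness or brightness, not for whatever signal the detector actually used.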

Another essential concept is not to treat GenAI as black magic that must be trusted simply because its complexity is immense. This is what fraudsters and tool makers want you to think, so that others do not build similar or better-performing models. A human mind will always remain a bigger puzzle than a system that can be dissected from beginning to end. GenAI has a signature, and will continue to have one, that can be tracked and countered. As the mimicry becomes harder to distinguish, subliminal authentication methods will only be shared privately. Similar to how Microsoft Defender controls which apps are trusted based on their signatures and black-box detection, identity systems will also need policies around which types of devices and images to accept. Enough entropy is available for a human to use, which makes these strategies work at detecting AI. This goes along with the earlier point that time is on the side of human-born solutions: AI breaks out for a little while and, eventually, is put back in a box with even more containers.

As can be seen, humans still have the option to outsmart any AI system through innovation and evolving ideas. A static security strategy produces predictable outcomes. Instead, keep a handful of cards that can be played only when needed to trump the bad actors. Similar to the picture at the beginning of the article, a person needs to have their mind and systems covering many places. KYC fraud prevention is multifaceted, requiring a constant feed of ideas and experiments to keep it secure. In the end, GenAI is one entity whose purpose is defined, while people form a network of ideas and can work together to overcome any obstacle.

Appendix: Research Notes

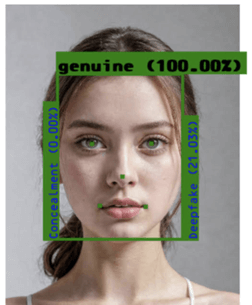

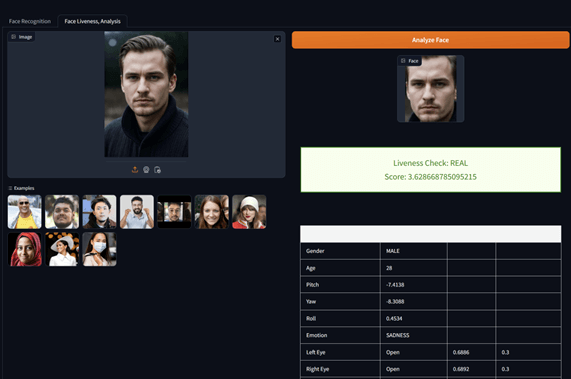

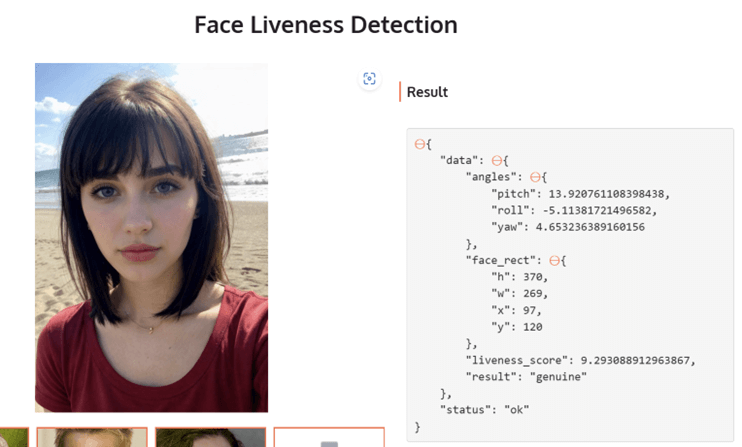

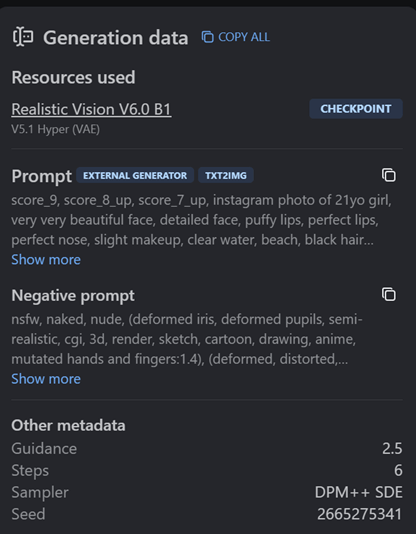

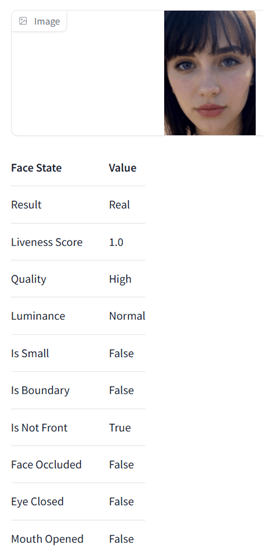

The images in this appendix show some of the images and generated selfies that were used to bypass deepfake detection. Tools available through Stable Diffusion, Hugging Face Spaces, and GitHub are simple to use and can be used to commit fraud. One example is C0untFloyd/roop-unleashed, an evolved fork of roop with a web server and many additions (github.com). The LivePortrait model, available as a Hugging Face Space by KwaiVGI, is another impressive release that will pave the way to better-quality spoofs.

These tools and technologies will continue improving rapidly because they apply to many scenarios. Many people use them for streaming, artwork, social media, and other benign activities. However, they can easily be turned into offensive measures such as anti-KYC. This tilts the odds further in the attacker's favor, as they are building on the work of top AI researchers and developers.