Blocking Greater than 1% is Bad Business

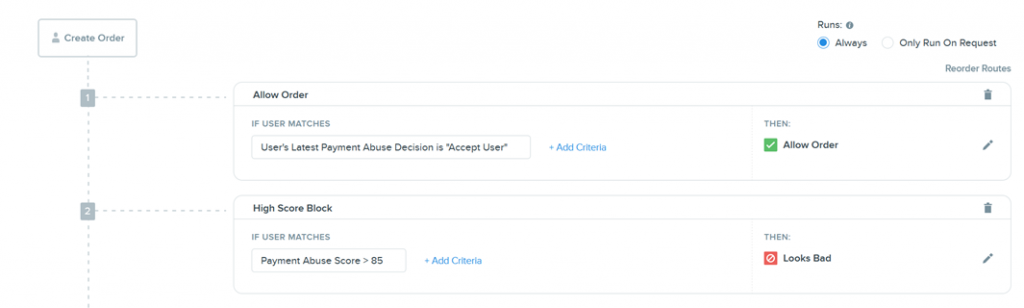

Many businesses have fraud rules like the one below. They see a risky user and block them without giving them a chance, or they send them through a slow manual review. In today’s online business, users expect results fast, and even a few minutes of delay can be enough to lose a customer for life. Most businesses spend heavily on conversion, and sometimes you only get one shot at delivering a good experience. This has led us to the principle that blocking more than 1% of users is bad, but so is putting the average 15% of your users through manual review.

Blocking Risky Orders

How can businesses start balancing customer experience and fraud?

It starts with understanding why you are blocking users and whether those reasons still hold today. Many rules, and even machine learning models, learn from past behavior. While this works very well against repeat fraudsters, good users eventually get caught in the mix. A good user typically won’t tell you that they were falsely blocked or that they disliked your identity review policies. They might never respond at all, so you never see the true scope of your false declines. Meanwhile, advanced fraudsters still get past your security because their attack costs are minimal and their supply of identities is practically limitless. Raising the monetary cost of each attack can be enough to persuade them to look elsewhere.

What about all the fraud committed by real identities?

For many businesses, the most damaging fraud comes from real users, not from fake or stolen identities. Global surveys point not to hackers but to ordinary users, acting either maliciously or by honest mistake. Businesses need to focus on the fraud that hits the bottom line, and for most, that is not the carder or the occasional fraud ring. Collecting a little extra information about your customer might be enough to persuade them not to defraud you. A customer might think twice before filing a chargeback when they know they verified their voice while making a large purchase. And if they do commit friendly fraud, you have an extra piece of evidence that might win you the dispute.

What about customers rated poorly by network intelligence tools?

Another problem with consortium models, in which businesses anonymously share data about people, is that a user marked bad by one business carries a higher risk score into yours, which can eventually lead to a blocked transaction. The problem is that we don’t know why the user was marked bad in the first place. Were they breaking a rule that might not matter to your business? Today, the only information we get may be that the user’s IP address or email is bad, implying that you should block them too. Or perhaps that user was hacked and someone used their IP address as a launching point. Either way, the user, who might be good, suffers the consequences. Instead of putting them through a frustrating process to prove their innocence, companies can offer an easy verification step that lets them redeem their trust.

AI Mapping out Fraud

Are there downsides to fraud machine learning?

Machine learning models work well until fraudsters figure out exactly what data they need to mimic a good user. Once fraudsters can automate that, different types of friction will be needed. Another dilemma is growing privacy protection that lets users share less information, which reduces the signals available to detect fraud. Furthermore, during a new fraud attack, your machine learning model takes time to identify the pattern before it raises risk scores. Collecting a unique identity attribute and feeding it back to your fraud tool can help stop the attack sooner. For example, you might see a surge of orders, many of which appear fraudulent, and block all of them. The better approach is to challenge those orders and gather more signal intelligence on the transactions. When a site behind Cloudflare is hit by a DDoS attack, you don’t see well-run sites simply blocking large amounts of traffic. Instead, they set up a series of challenges, either JavaScript or CAPTCHA checks. The same approach should apply to identity verification and fraud management.
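To make the challenge-instead-of-block idea concrete, here is a minimal Python sketch of an escalating friction policy, loosely modeled on the JavaScript-then-CAPTCHA ladder described above. It assumes a risk score between 0 and 1 from your existing fraud tool; the step names in `LADDER`, the `allow_below` threshold, and the `max_failures` limit are hypothetical values chosen for illustration, not Trust Swiftly’s or Cloudflare’s actual logic.

```python
import math

# Hypothetical verification ladder, least intrusive first, loosely analogous
# to escalating from a JavaScript check to a CAPTCHA.
LADDER = ["email_otp", "sms_otp", "id_document"]

def next_step(risk_score: float, steps_passed: int, steps_failed: int,
              allow_below: float = 0.3, max_failures: int = 2) -> str:
    """Return "allow", "block", or the name of the next challenge to present.

    risk_score is assumed to be in [0, 1], as produced by an existing fraud tool.
    """
    # Block only after repeated failed challenges, never on the score alone.
    if steps_failed >= max_failures:
        return "block"
    # Low-risk orders go through with no added friction.
    if risk_score < allow_below:
        return "allow"
    # Scale the risk above the threshold to between 1 and len(LADDER) required steps.
    excess = (risk_score - allow_below) / (1 - allow_below)
    required = min(len(LADDER), max(1, math.ceil(excess * len(LADDER))))
    if steps_passed >= required:
        return "allow"
    return LADDER[steps_passed]

# A high-risk order during an attack surge is challenged, not blocked.
print(next_step(0.95, steps_passed=0, steps_failed=0))
print(next_step(0.95, steps_passed=3, steps_failed=0))
```

Higher scores require more verification steps before an order is allowed, and an order is blocked only after repeated failed challenges, so a surge of suspicious orders produces more signal instead of a wall of false declines.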

Lastly, machine learning models typically can’t reach the less-than-1% block rate that companies aspire to. After thoroughly reviewing blocked users, many companies find that a good share of them were legitimate. One of our eCommerce clients became frustrated with the number of false positives from their fraud tool. In one case they found, a person’s risk score rose sharply because their card kept getting declined and they made multiple attempts, which can look like card testing by fraudsters. On closer review, however, the user was legitimate and didn’t need to be blocked. Their team wasn’t large enough to investigate every case, and sometimes they only found out when they read a bad review online. Instead, they were able to remove some of their security rules, such as CVC and 3DS requirements, confident that fraudsters would still be identified by Trust Swiftly.
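The false positive in this example comes from treating repeated declines as card testing. Below is a minimal Python sketch of one way to separate the two patterns, assuming you can group payment attempts by session and fingerprint each card; the `Attempt` fields and the three-card threshold are hypothetical. The heuristic rests on the observation that card testing typically cycles through many different cards, while a genuine customer usually retries the same one.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Attempt:
    session_id: str
    card_fingerprint: str  # hypothetical hash identifying the card, never the raw number
    declined: bool

def classify_sessions(attempts: list[Attempt], max_cards: int = 3) -> dict[str, str]:
    """Label each checkout session by its payment-attempt pattern."""
    cards: dict[str, set[str]] = defaultdict(set)
    declines: dict[str, int] = defaultdict(int)
    for a in attempts:
        cards[a.session_id].add(a.card_fingerprint)
        if a.declined:
            declines[a.session_id] += 1
    labels = {}
    for session, fingerprints in cards.items():
        if len(fingerprints) > max_cards:
            # Many different cards in one session: typical of card testing.
            labels[session] = "possible_card_testing"
        elif declines[session] > 0:
            # Declines on one or a few cards: looks like a customer retrying.
            labels[session] = "customer_retrying"
        else:
            labels[session] = "ok"
    return labels

attempts = [Attempt("s1", "card_a", True)] * 3 + [Attempt("s1", "card_a", False)]
attempts += [Attempt("s2", f"card_{i}", True) for i in range(5)]
print(classify_sessions(attempts))
```

Sessions labeled `customer_retrying` are good candidates for a verification challenge rather than a block.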

How do we stop synthetic identities without blocking them?

Synthetic identities are another hot topic in the identity world. Fraudsters may combine real data with fake information. To keep it short, no silver bullet stops them: fraudsters have so much data available today that their opportunities are practically unlimited. Instead, dynamic verifications can start to uncover the fake people. Continuous monitoring with added friction is usually enough to provoke a slip-up, which can then be fed to your machine learning fraud tool. Patterns will eventually emerge as you supercharge your fraud tool with more data than the fraudsters have.
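One way to catch such a slip-up is to compare the attributes an account provides across repeated verifications. This minimal Python sketch assumes each verification yields a dictionary of claimed attributes (the attribute names and account IDs are hypothetical) and reports every attribute whose value conflicts with the first one recorded. It is a simple consistency check, not a complete synthetic identity detector; the resulting pairs could be sent to your fraud tool as labeled signals.

```python
from collections import defaultdict

def find_slip_ups(verifications: list[tuple[str, dict[str, str]]]) -> list[tuple[str, str]]:
    """Return (account_id, attribute) pairs whose claimed value changed between verifications."""
    first_seen: dict[str, dict[str, str]] = defaultdict(dict)
    slip_ups = []
    for account_id, attributes in verifications:
        for name, value in attributes.items():
            # Remember the first value claimed for this attribute; compare later ones to it.
            original = first_seen[account_id].setdefault(name, value)
            if original != value:
                slip_ups.append((account_id, name))
    return slip_ups

verifications = [
    ("acct_1", {"dob": "1990-01-01", "phone": "+1-555-0100"}),
    ("acct_1", {"dob": "1991-02-02"}),  # a different birth date at a later check
    ("acct_2", {"dob": "1985-05-05"}),
    ("acct_2", {"dob": "1985-05-05"}),
]
print(find_slip_ups(verifications))
```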

What is the path forward to getting to a less than 1% block rate?

Merchants need to rethink their approach to fraud and start looking at areas to improve. If you are blocking or manually reviewing more than 1% of orders, then you haven’t created an enjoyable experience for your employees or customers. The future is dynamic friction that keeps verification costs low, makes customers happy, and stops fraudsters swiftly.
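Tracking these rates is simple arithmetic. Here is a minimal Python sketch, assuming each order ends with one of the hypothetical decision labels `allow`, `review`, or `block`, and reading the 1% target as applying to blocks and manual reviews separately.

```python
from collections import Counter

TARGET = 0.01  # the 1% goal, applied to each rate separately

def friction_rates(decisions: list[str]) -> tuple[float, float]:
    """Return (block_rate, review_rate) as fractions of all orders."""
    if not decisions:
        return 0.0, 0.0
    counts = Counter(decisions)
    total = len(decisions)
    return counts["block"] / total, counts["review"] / total

def over_target(decisions: list[str], target: float = TARGET) -> bool:
    """True if either blocks or manual reviews exceed the target share."""
    block_rate, review_rate = friction_rates(decisions)
    return block_rate > target or review_rate > target

# Example: 1,000 orders, 10 blocked and 20 sent to manual review.
decisions = ["allow"] * 970 + ["review"] * 20 + ["block"] * 10
block_rate, review_rate = friction_rates(decisions)
print(f"blocked {block_rate:.1%}, reviewed {review_rate:.1%}")
print("over target:", over_target(decisions))
```

In this example the block rate sits right at 1%, but manual reviews at 2% still put the merchant over target.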